Virtual Desktop Platform Shootout, Part 2 of 4

Other Related Blog Posts: 1 of 4; 3 of 4; 4 of 4

Author: Jamie Engelhard, CTO

Contributor: Michael Rocchino, IT Support Specialist

Welcome back! Last time we introduced the series and provided the background and rationale for our VDI shootout. With the introductions out of the way, let’s get right into the meat of the matter! First, we need to provide some details about the test environment, starting with the platform used to host the virtual desktop machines (VDMs).

For this first phase of our testing we are purely focused on comparing Citrix XenDesktop 7.6 to VMware Horizon 6, so for the sake of consistency we will serve both environments using a single hosting platform: the hyper-converged NX-3460 Virtual Computing Platform from Nutanix. But wait, what is this hyper-convergence anyway? To attempt to answer that, we first need to take a brief detour and reflect on the most common data center implementations still in use today.

Today’s Data Center Architecture is Yesterday’s News

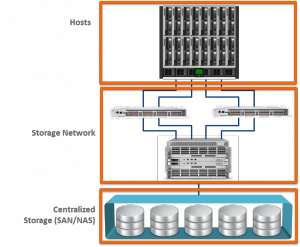

Using technology which is more than a decade old, most businesses still maintain a 3-tiered architecture in the data center. With this model the Server Hosts, Storage Area Network (SAN), and Storage Devices are each complex, independent, and highly specialized OEM hardware platforms that are loosely connected using vendor-specific implementations of open standards. A typical 3-tier data center will look something like this:

Each of these layers is its own complex system, and while they are connected together, they are not truly integrated, nor are they completely aware of one another. This fading model still works – sure – but it was developed for use in an era of physical servers, and large-scale virtualization of the data center has exposed its inherent limitations. At scale and over time, these systems suffer from limited scalability, hidden performance bottlenecks, administrative complexity, and a lifecycle that includes periodic and costly rip-and-replace upgrades. Faced with these shortcomings, the early Web 2.0 companies like Google and Facebook needed a better approach. The market did not offer an alternative solution at the time, so they had no choice but to build it themselves.

The Birth of Software-Defined Storage and Hyper-Convergence

These were not hardware companies, so they turned instead to the technology they understood best and to a critical resource they had in huge supply: talented software developers. These companies created sophisticated algorithms to seamlessly interconnect massive farms of inexpensive commodity servers, each with local compute and storage, into a completely distributed but centrally managed and self-aware computing and storage platform with nearly limitless scale-out potential. In these environments the SAN, which played a pivotal role in the evolution of the data center at the turn of the century, was replaced entirely by software. Software-Defined Storage (SDS) was born and, in hindsight, the death knell had sounded for the reign of the SAN. Over the last few years, several companies have emerged to apply these pioneering SDS principles and bring these so-called “hyper-converged” systems to market for all to use.

Just to clear up some terminology, the “hyper” in hyper-convergence distinguishes these truly revolutionary solutions from the evolutionary step of “converged” infrastructure introduced by legacy storage and computing vendors, which is essentially a repackaging of the same non-integrated 3-tier technology. In converged solutions, the vendors provide pre-configured bundles of hardware and software as a single SKU, which mainly simplifies procurement and support but does little to solve the fundamental issues of scaling, integration, and disparate management. Hyper-convergence goes much further, tightly integrating the compute, hypervisor, and storage components to collapse the 3-tier architecture into a holistic solution which is simpler, more compact, and modular by design. This architecture allows growth in small increments or at massive scale simply by adding more nodes to the cluster.

The Road Ahead is Converged

So, hyper-convergence reduces power consumption and rack space, dramatically simplifies storage management, and scales predictably to very large deployments, all in a software-defined solution without the need for custom, proprietary hardware. Sounds almost too good to be true? As you will see later in the series, the gap between the promise and the reality of hyper-convergence is entirely dependent on the vendor’s execution, but one thing is guaranteed: these solutions will continue to mature and improve as investment ramps up and companies compete to take advantage of the huge market potential.

IDC (International Data Corporation), in its “Worldwide Integrated Systems 2014–2018 Forecast,” states that the converged systems market will grow at a five-year compound annual growth rate (CAGR) of 19.6 percent, from $7.3 billion in 2013 to $17.9 billion in 2018. The report “Software Defined Data Center (SDDC) Market by Solution (SDN, SDC, SDS & Application), by End User (Cloud Providers, Telecommunication Service Providers and Enterprises) and by Regions (NA, Europe, APAC, MEA and LA) – Global Forecast to 2020” forecasts that the total SDDC market will grow from $21.78 billion in 2015 to $77.18 billion in 2020, an estimated CAGR of 28.8% over that period. This projected market growth is fantastic, and many competitors have entered the ring, which will ultimately benefit customers by offering more choices and lower prices.
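As a quick sanity check on the two forecasts, the CAGR can be recomputed from the start and end values alone, using the standard formula CAGR = (end / start)^(1 / years) − 1. The sketch below uses only the dollar figures and year spans quoted above; the function name `cagr` is our own.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.196 means 19.6%)."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Figures quoted above, in billions of US dollars.
idc = cagr(7.3, 17.9, 2018 - 2013)
sddc = cagr(21.78, 77.18, 2020 - 2015)

print(f"IDC integrated systems: {idc:.1%}")  # IDC integrated systems: 19.6%
print(f"SDDC: {sddc:.1%}")                   # SDDC: 28.8%
```

Both recomputed rates round to the percentages stated in the reports, so the quoted start values, end values, and growth rates are internally consistent.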

Our Testing Platform

So, now that we have introduced the concept of hyper-converged architecture, we can provide some specifics about the environment we used for the VDI software test. As mentioned earlier, we used a Nutanix NX-3460 block as the hosting platform for both the XenDesktop and the Horizon desktop pools. The NX-3460 consisted of 4 nodes, each of which was equipped as follows:

| Category | Component | Spec |
|---|---|---|
| CPU | Processor | Intel Xeon E5-2680 v2 (Ivy Bridge) |
| | Cores per CPU | 10 |
| | CPUs Installed | 2 |
| | Base Clock Speed | 2.8 GHz |
| | Cache | 25 MB |
| | Max Memory Speed | 1866 MHz |
| | Max Memory Bandwidth | 59.7 GB/s |
| Memory | Module Size & Type | 16 GB DDR3 |
| | Modules | 16 |
| | Module Speed | 1866 MHz |
| Networking | 10 Gb Interface Type | Intel 82599EB 10-Gigabit SFI/SFP+ |
| | 10 Gb Interface Qty | 2 |
| | 1 Gb Interface Type | Intel I350 Gigabit |
| | 1 Gb Interface Qty | 2 |
| | LOM Interface | Yes |
| Disk | SSD Drives | 2 x 400 GB |
| | SATA HDD Drives | 4 x 1 TB |
| | Total Hot Storage Tier | 768 GB |
| | Total Cold Storage Tier | 4 TB |

Combined, these 4 nodes comprise a cluster with the following specs:

| Category | Component | Nutanix NX-3460 (4 nodes) |
|---|---|---|
| CPU | Total Core Count | 80 |
| Memory | Total RAM | 1024 GB |
| Disk | Total Hot Storage Tier | 3 TB |
| | Total Cold Storage Tier | 16 TB |
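The cluster totals follow directly from the per-node specs multiplied by the node count. The sketch below makes that arithmetic explicit; the `NodeSpec` type and `cluster_totals` helper are hypothetical names for illustration, and the hot-tier figure is taken as listed in the per-node table rather than derived from the raw SSD capacity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeSpec:
    cpus: int
    cores_per_cpu: int
    dimm_count: int
    dimm_size_gb: int
    hot_tier_gb: int   # SSD tier per node, as listed in the per-node table
    cold_tier_tb: int  # HDD tier per node (4 x 1 TB)


def cluster_totals(node: NodeSpec, node_count: int) -> dict[str, int]:
    """Aggregate identical nodes into cluster-wide totals."""
    return {
        "cores": node.cpus * node.cores_per_cpu * node_count,
        "ram_gb": node.dimm_count * node.dimm_size_gb * node_count,
        "hot_tier_gb": node.hot_tier_gb * node_count,
        "cold_tier_tb": node.cold_tier_tb * node_count,
    }


nx3460_node = NodeSpec(cpus=2, cores_per_cpu=10, dimm_count=16,
                       dimm_size_gb=16, hot_tier_gb=768, cold_tier_tb=4)
totals = cluster_totals(nx3460_node, node_count=4)
print(totals)
# {'cores': 80, 'ram_gb': 1024, 'hot_tier_gb': 3072, 'cold_tier_tb': 16}
```

The 3072 GB hot tier is the 3 TB shown in the cluster table, and the other totals match it row for row.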

As for software, the following versions of the core VDI components were used in our testing:

| Component | Version |
|---|---|
| Citrix XenDesktop | 7.6 |
| VMware Horizon (with View) | 6.0.1 |
| VMware vCenter Server | 5.5.0 |
| VMware ESXi | 5.5.0 |
| Login VSI | 4.1.2 |

The Base Virtual Desktop Machine

The VDM base image was created via physical-to-virtual (P2V) conversion of a desktop PC loaded with the firm’s standard image. All standard firm applications were left in the base image except the FileSite document management plugins, which would have interfered with the Login VSI script by intercepting Microsoft Office file operation commands. Many vendors prescribe load testing against a generic desktop with only Windows and Office; our approach instead provides a much more realistic measurement of desktop density per computing node. As a result, VSImax numbers will be lower than those published in vendors’ reference architectures and marketing materials. In exchange, we can be much more confident that the density achieved in the production environment will be in line with the results of our testing, although it requires some extra effort to tweak the image for reliable test execution.

Below is a summary of the base VDM configuration.

| Component | Value |
|---|---|
| vCPU | 2 |
| RAM | 3.5 GB |
| OS | Windows 7 Enterprise, 32-bit |
| Office | Office 2010 |

In addition to MS Office, approximately 50 additional applications were installed in the base VDM image, not counting the typical Microsoft prerequisite libraries and runtime components (.NET, Visual C++, XML, VSTO, etc.). Once we had created and cleaned up our base Windows image, we installed the appropriate VDI desktop components from Citrix and VMware as needed, and we were ready to create our desktop catalogs and pools.

Provisioning Virtual Desktop Machines

For the purposes of our testing, we used Citrix Machine Creation Services (MCS) and VMware Linked Clones. We are not going to digress into a lengthy discussion of the various methods of provisioning desktops for VDI, but suffice it to say that we used the method that is most directly comparable across the two vendors’ solutions and which, in any case, is the method the firm is most likely to use. We made every effort to create a level playing field on which the two solutions would compete, which meant standardizing on desktop provisioning parameters, such as:

- Linked Clones / MCS

- Dedicated / Static Desktop Assignments

- Upfront Provisioning and Auto-assignment of Desktops

- Single Datastore for Image and Clones

In addition, throttling of power operations was disabled to allow each system to power on and off desktops as quickly as possible.
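One way to keep the playing field level is to write the standardized settings down once and diff each platform’s configuration against them before every run. The sketch below does that with plain dictionaries. The setting keys and the `parity_diff` helper are hypothetical and vendor-neutral; they are not Citrix or VMware setting names or APIs.

```python
from typing import Any, Mapping

# Hypothetical, vendor-neutral summary of the settings standardized above.
# These keys are illustrative only; they are not Citrix or VMware setting names.
STANDARD_SETTINGS: dict[str, Any] = {
    "clone_method": "linked clone / MCS",
    "assignment": "dedicated",
    "provision_upfront": True,
    "auto_assign": True,
    "datastore_count": 1,          # image and clones share a single datastore
    "power_op_throttling": False,  # power operations run as fast as possible
}


def parity_diff(a: Mapping[str, Any], b: Mapping[str, Any]) -> dict[str, tuple[Any, Any]]:
    """Return every setting whose value differs between two pool definitions."""
    return {
        key: (a.get(key), b.get(key))
        for key in sorted(set(a) | set(b))
        if a.get(key) != b.get(key)
    }


xendesktop_catalog = dict(STANDARD_SETTINGS)
horizon_pool = dict(STANDARD_SETTINGS)
print(parity_diff(xendesktop_catalog, horizon_pool))  # {}

# A hypothetical drift: the Horizon pool accidentally spread across two datastores.
drifted_pool = {**horizon_pool, "datastore_count": 2}
print(parity_diff(xendesktop_catalog, drifted_pool))  # {'datastore_count': (1, 2)}
```

An empty result means both platforms were configured identically on every parameter being tracked. Any mismatch shows up with both values side by side.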

Login VSI Environment and Test Parameters

The Login VSI server components and data share were hosted on servers outside the VDI environment to avoid any impact on the desktop infrastructure. For Login VSI session launchers, the firm was able to provide access to two dedicated training rooms and the PC hardware required to launch 300 Login VSI sessions. The launcher PCs were fitted with the appropriate VDI client software for both vendors’ solutions.
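To put the 300-session launch capacity in context, the base VDM configuration and the cluster totals give a rough picture of the allocation ratios a full 300-desktop run implies. This is a back-of-envelope sketch, not a result from our testing. It assumes all 300 sessions run on the cluster at once, and it ignores ESXi and Nutanix Controller VM overhead, so the real headroom would be smaller. The `sizing_check` helper is a hypothetical name.

```python
def sizing_check(sessions: int, vcpu_per_vm: int, ram_gb_per_vm: float,
                 physical_cores: int, physical_ram_gb: float) -> dict[str, float]:
    """Allocation ratios only; ignores hypervisor and Controller VM overhead."""
    configured_vcpus = sessions * vcpu_per_vm
    configured_ram_gb = sessions * ram_gb_per_vm
    return {
        "vcpus_per_physical_core": configured_vcpus / physical_cores,
        "configured_ram_gb": configured_ram_gb,
        "ram_commit_ratio": configured_ram_gb / physical_ram_gb,
    }


# 300 sessions of the base VDM (2 vCPU, 3.5 GB) on the 4-node cluster (80 cores, 1024 GB).
check = sizing_check(300, 2, 3.5, 80, 1024)
print(check)
# {'vcpus_per_physical_core': 7.5, 'configured_ram_gb': 1050.0, 'ram_commit_ratio': 1.025390625}
```

A commit ratio slightly above 1 means that, at 300 powered-on desktops, configured guest memory would exceed physical RAM before any overhead is counted.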

All testing was performed using the Login VSI Knowledge Worker (2 CPU) workload, and session launch timing was carefully controlled so that both platforms were tested at an identical pace. Before testing, every test user was logged into their VDM using the “profile create” option in Login VSI to set up the local user profile and pre-initialize the user environment.
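Login VSI handles session pacing itself. The sketch below is not its implementation. It only illustrates what identical timing and pace means: with the same session count and the same launch window, each platform receives a new session at exactly the same offsets. The 48-minute (2880 s) window, the 20 launcher PCs, and the `launch_schedule` helper are all hypothetical values chosen for illustration.

```python
def launch_schedule(total_sessions: int, launch_window_s: float,
                    launchers: int) -> list[tuple[float, str]]:
    """Evenly spaced launch offsets (seconds), assigned round-robin to launcher PCs."""
    interval = launch_window_s / total_sessions
    return [
        (round(i * interval, 3), f"launcher-{i % launchers + 1:02d}")
        for i in range(total_sessions)
    ]


# Hypothetical values: a 2880 s launch window and 20 launcher PCs.
schedule = launch_schedule(total_sessions=300, launch_window_s=2880, launchers=20)
print(schedule[:3])  # [(0.0, 'launcher-01'), (9.6, 'launcher-02'), (19.2, 'launcher-03')]
```

Because the schedule depends only on the session count, the window, and the launcher count, running it unchanged against XenDesktop and Horizon gives both platforms the same login pressure over time.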

And Away We Go!

With all the background information and environment preparation taken care of, we can now get on to the testing! If you have never seen Login VSI in operation, check out the video below. Please excuse the shaky cell phone video; this was not a highly produced piece.

Next time we will deliver the results from our first phase of testing and find out which VDI software vendor’s solution provides the best virtual desktop density, boot time, and network bandwidth results.


Jamie Engelhard, CTO – Helient Systems LLC – Jamie is an industry-leading systems architect, business analyst, and hands-on systems engineer. As one of the founding partners of Helient Systems, Jamie brings an immense range of IT knowledge and focuses on several key areas such as architecting fully redundant Virtual Desktop Infrastructure solutions, Application integration and management, Document Management Systems, Microsoft Networking, Load Testing, and end-user experience optimization. Jamie has provided technical, sales, and operational leadership to several consulting firms and scores of clients over his 20-year career in IT. If you have any questions or need more information, please contact jengelhard@helient.com.